Enhanced methodology and tools for system performance evaluation

In parallel with the definition of future GNSS systems and missions, this activity aims to define a new methodology for system-level performance assessment that complements the macro-models currently in use and keeps pace with the evolution of services and new system capabilities. The methodology is intended to support realistic assessment of more complex services delivered in challenging environments, taking time and space dependency into account. The activity also includes the design, development and testing of a tool that implements the proposed methodology.

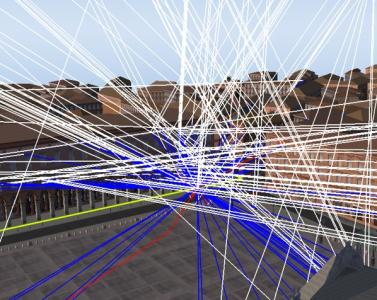

The performance of future GNSS systems will be driven by increasingly demanding applications that require the highest accuracy in the most challenging scenarios and under all conditions. To meet this need, services are becoming more sophisticated and system features more complex (time/space diversity, advanced coding, etc.). New methodologies and tools for assessing performance are therefore needed, in step with the ongoing definition of the second generation of GALILEO satellites and an extension of the EGNOS V3 mission, together with their enhanced capabilities.

At present, system performance evaluation is often based on standard figures of merit (Weighted Least Mean Square (WLMS) accuracy, Dilution of Precision (DOP), DOC, etc.) and on macro-models of the environment that rely on a number of assumptions and simplifications. Although valid for well-established performance indicators, these simplifications are generally not suitable for analysing future services and applications. In particular, the description of the environment is usually over-simplified and does not adequately capture dependencies on the actual obstacles, user location and time. Most GNSS performance figures are assessed through a snapshot analysis of system requirements, normally averaged, with very basic visibility profiles. At the other extreme, approaches such as deterministic ray tracing of all possible time-variant sources of multipath are too complex to allow assessment on a large scale.

An intermediate level of abstraction is therefore necessary. It would include the definition of new synthetic performance indicators that capture, for example, the effectiveness and speed of the transport of information to the user, the robustness of data delivery and the capability of a filtering solution, together with modelling of the realistic evolution of a given scenario in both space and time.
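To make the limitation of snapshot figures of merit concrete, the sketch below computes a geometric DOP value for one epoch and shows how an azimuth-dependent obstacle mask changes it. This is an illustrative assumption and not part of the activity: the function names (`line_of_sight`, `invert`, `gdop`), the east-north-up convention, the sample constellation and the "urban canyon" mask are all hypothetical.

```python
import math

def line_of_sight(az_deg, el_deg):
    """Unit vector (east, north, up) from the user towards a satellite."""
    az, el = math.radians(az_deg), math.radians(el_deg)
    return (math.cos(el) * math.sin(az), math.cos(el) * math.cos(az), math.sin(el))

def invert(m):
    """Invert a small square matrix (Gauss-Jordan with partial pivoting)."""
    n = len(m)
    # Augment the matrix with the identity: [m | I]
    a = [list(row) + [1.0 if i == j else 0.0 for j in range(n)]
         for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("singular geometry matrix")
        a[col], a[pivot] = a[pivot], a[col]
        p = a[col][col]
        a[col] = [v / p for v in a[col]]
        for r in range(n):
            if r != col:
                f = a[r][col]
                a[r] = [vr - f * vc for vr, vc in zip(a[r], a[col])]
    return [row[n:] for row in a]

def gdop(sats, mask=lambda az_deg: 0.0):
    """GDOP for satellites given as (azimuth, elevation) pairs in degrees.

    `mask` maps an azimuth to the minimum visible elevation (hypothetical
    obstacle profile). Returns None when fewer than 4 satellites are visible.
    """
    visible = [(az, el) for az, el in sats if el >= mask(az)]
    if len(visible) < 4:
        return None
    # Geometry matrix rows: line-of-sight components plus the clock term
    g = [list(line_of_sight(az, el)) + [1.0] for az, el in visible]
    gtg = [[sum(row[i] * row[j] for row in g) for j in range(4)] for i in range(4)]
    q = invert(gtg)
    return math.sqrt(sum(q[i][i] for i in range(4)))

# Made-up constellation for one epoch: (azimuth, elevation) in degrees
SATS = [(0, 80), (30, 25), (90, 20), (150, 35), (210, 15), (270, 30), (330, 45)]

def urban_canyon(az_deg):
    """Assumed mask: buildings to the east and west block everything below 40 degrees."""
    return 40.0 if 45 <= az_deg <= 135 or 225 <= az_deg <= 315 else 5.0

open_sky = gdop(SATS)
canyon = gdop(SATS, urban_canyon)
```

With the same constellation, the masked case can only keep or degrade the open-sky value, because satellites are removed from the solution. A single averaged snapshot with a flat elevation mask hides exactly this dependence on obstacles and user location.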
The environmental aspects to be modelled might include blockage/masking, multipath and signal propagation in both the ionosphere and the troposphere. The associated effects on performance might be estimated in terms of the filtered PVT solution (position domain), average time to first fix, time to authenticated fix, average and peak time for key recovery, continuity of a given service level, probability of cycle slips and/or loss of lock, comparison among different time/space diversity schemes, etc. Modelling of the RF environment should consider, as a minimum, the effects of (1) fading and multipath, (2) RF interference and (3) signal propagation in both the ionosphere and the troposphere.

The objectives of this activity are therefore to define the methodology, the performance indicators and the scenarios, to implement them in a tool and to analyse the performance of more complex services. The proposed approach will include standard cases to which complexity can be added or removed in order to focus on a particular study or application. Furthermore, making use of visual tools and graphics wherever possible would help the analysis and consolidation of the results.

Tasks:
- Definition of services/key performance indicators/models/methodology for system performance evaluation
- Definition of scenarios for performance assessment
- Requirement definition
- Candidate architectures for potential tool implementation
- Simulation tool design
- Simulation tool implementation
- Testing and validation of tool
- Performance evaluation in the predefined scenarios
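Time-dependent indicators such as continuity of a given service level could be derived from per-epoch simulation results. The following is a minimal sketch under stated assumptions, not the methodology to be defined by the activity: the names `availability` and `continuity`, the window-based definition of continuity and the sample flags are hypothetical.

```python
def availability(flags):
    """Fraction of epochs in which the service level is met."""
    return sum(flags) / len(flags)

def continuity(flags, window):
    """Fraction of windows that start in an available epoch and stay
    available for `window` consecutive epochs (assumed definition).

    Returns None when no complete window starts in an available epoch.
    """
    starts = [i for i in range(len(flags) - window + 1) if flags[i]]
    if not starts:
        return None
    kept = sum(all(flags[i:i + window]) for i in starts)
    return kept / len(starts)

# Made-up per-epoch results: True where the service level is met
FLAGS = [True, True, True, False, True, True, True, True, False, True]

avail = availability(FLAGS)
cont = continuity(FLAGS, window=3)
```

Unlike an averaged snapshot, the continuity figure depends on how outages are distributed in time: the two short outages in the sample interrupt most three-epoch windows even though availability remains high.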